Can I Build My Own JARVIS? Let’s Find Out.

For years, I’ve been fascinated by AI assistants. They are useful, sure, but they always seem to be missing something. We’ve all seen the sci-fi dream of a true AI companion: something like JARVIS from Iron Man or the computer from Star Trek, which just gets us and responds naturally, rather than a glorified voice remote. That’s the inspiration behind this project: I want to build a home AI that goes beyond simple automation and becomes a real, intelligent assistant. I fully realize how absurdly difficult this is. But thinking like a scientist, my goal isn’t success or failure; it’s running experiments, seeing what works, and learning from the outcomes.

This series will document my journey in creating a home AI system from scratch, covering everything from hardware selection to AI model customization and real-world testing. There will be plenty of trial and error, unexpected challenges, and exciting breakthroughs along the way. Whether you're an AI enthusiast, a developer, or just someone curious about where this technology is heading, I hope this series gives you insight into the process of building a smarter assistant.

The Vision: What I Want This AI to Do

Most voice assistants today are limited—they can turn on your lights, play music, or answer basic questions, but they lack real context, memory, and adaptability. My goal is to create an AI that can:

- Understand and remember context across conversations

- Control smart home devices intelligently, not just on command

- Use only local LLMs and prioritize privacy

- Proactively engage in conversations while remaining unobtrusive—this is more than just a technology challenge, and I have a lot of thoughts on it

- Be built entirely for my home, which lets me cut a lot of corners

This isn’t about reinventing the wheel. It’s about running experiments, analyzing the results, and learning from them. Whether this works or not, I’ll gain valuable insights into the application of LLMs while using them to build the very thing they power.

Capturing the Vision: My Project Notes

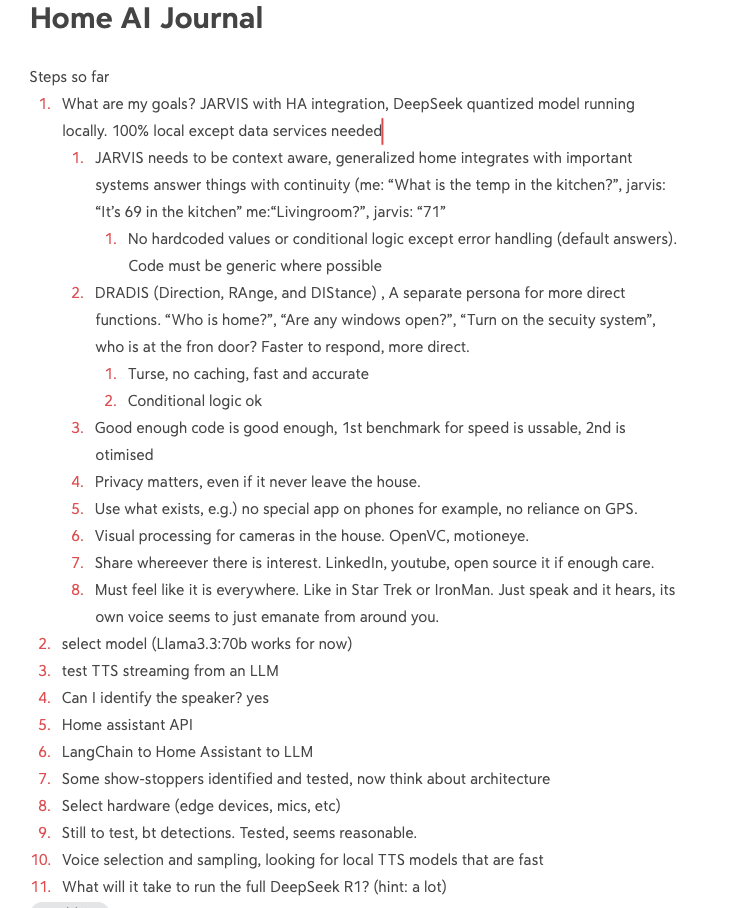

Before diving into the technical details, I outlined my core goals and priorities. In the spirit of sharing, here are my early notes. Be kind:

Core AI Model and Local Processing

- JARVIS with Home Assistant (HA) integration and a quantized large language model running locally, starting with Llama 3.3 70B, though I’m open to experimenting with other large models (also, if anyone has a spare RTX 5090 lying around, let’s talk)

- 100% local processing for LLMs, with online access only for external data like weather and news

Context Awareness & Interaction

- AI should handle multi-step conversations with continuity

- Example:
  - Me: “What is the temp in the kitchen?”
  - JARVIS: “It’s 69 in the kitchen”
  - Me: “Living room?”
  - JARVIS: “71”

- No "hardcoded" responses—except for error handling (e.g., “I’m unable to respond right now”) and a few edge cases. Responses should remain dynamic.
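Continuity like the kitchen/living room exchange mostly comes down to sending recent turns back to the model along with each new question, so that “Living room?” arrives with the earlier temperature question still in view. Here is a minimal sketch, assuming a chat-style local LLM that accepts a list of role/content messages (Ollama’s `/api/chat` endpoint works this way). The `ConversationMemory` class, its `max_turns` parameter, and the system prompt are hypothetical illustrations, not this project’s code.

```python
from collections import deque


class ConversationMemory:
    """Hypothetical helper: keeps a rolling window of recent turns.

    Messages use the role/content chat format accepted by local LLM
    servers such as Ollama's /api/chat endpoint.
    """

    def __init__(self, system_prompt: str, max_turns: int = 10):
        self.system_prompt = system_prompt
        # One turn = one user message + one assistant reply, so keep 2x messages.
        # Older messages fall off automatically once the deque is full.
        self.history = deque(maxlen=max_turns * 2)

    def add(self, role: str, content: str) -> None:
        self.history.append({"role": role, "content": content})

    def build_messages(self, user_text: str) -> list[dict]:
        """Return system prompt + recent history + the new user message."""
        return (
            [{"role": "system", "content": self.system_prompt}]
            + list(self.history)
            + [{"role": "user", "content": user_text}]
        )


memory = ConversationMemory("You are a concise home assistant.", max_turns=5)
memory.add("user", "What is the temp in the kitchen?")
memory.add("assistant", "It's 69 in the kitchen")

# The model receives the kitchen exchange too, so it can interpret
# "Living room?" as "What is the temp in the living room?"
messages = memory.build_messages("Living room?")
```

Capping the history keeps prompts short enough for a large local model to answer quickly; how many turns to keep is a tuning choice.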

Secondary AI Persona: DRADIS (Direction, Range, and Distance)

- A separate persona for direct, fast-response queries

- Example commands: “Who is home?”, “Are any windows open?”, “Turn on the security system”, “Who is at the front door?”

- Terse responses, optimized for speed and responsiveness

- Conditional logic is acceptable for efficiency
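Since DRADIS is allowed to use conditional logic, one plausible design is a fast path that pattern-matches known commands and only hands the query to the full LLM when nothing matches. This is a minimal sketch, assuming a hypothetical `HOME_STATE` snapshot in place of live Home Assistant data; the patterns, handler names, and people are illustrative only.

```python
import re

# Hypothetical snapshot of home state; a real system would pull this
# from Home Assistant. Structure and names are illustrative.
HOME_STATE = {
    "people_home": ["Alex", "Sam"],
    "open_windows": ["kitchen"],
}


def who_is_home(state):
    people = state["people_home"]
    return ", ".join(people) if people else "Nobody."


def windows_open(state):
    windows = state["open_windows"]
    return "Open: " + ", ".join(windows) if windows else "All closed."


# (pattern, handler) pairs, checked in order; the first match wins.
DRADIS_ROUTES = [
    (re.compile(r"\bwho('s| is) home\b", re.IGNORECASE), who_is_home),
    (re.compile(r"\bwindows? (are )?open\b", re.IGNORECASE), windows_open),
]


def route(query, state):
    """Answer tersely via DRADIS if a fast-path pattern matches, else defer."""
    for pattern, handler in DRADIS_ROUTES:
        if pattern.search(query):
            return ("dradis", handler(state))
    # No match: let the conversational model (JARVIS) handle it.
    return ("jarvis", None)


print(route("Who is home?", HOME_STATE))  # ('dradis', 'Alex, Sam')
```

Matched commands skip model inference entirely and always answer the same way; the trade-off is that anything phrased unexpectedly falls through to the slower conversational path.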

Additional Features & Future Enhancements

- Voice Training & Recognition – AI will recognize family members based on their voice (already working). It will also handle edge cases, such as recognizing guests or unknown voices, and determine appropriate responses based on context.

- Contextual Addressing – Distinguishing between users to provide appropriate responses (e.g., addressing individuals properly in conversations)

- Proactive Engagement – The AI won’t just respond to queries but will initiate conversations based on context (e.g., notifying when someone is at the front door, letting you know it's about to rain if there is a window open)
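The rain-and-open-window example can be expressed as a simple trigger that the assistant checks periodically. Here is a minimal sketch, assuming Home Assistant-style entity IDs (`domain.object_id`) and a rain forecast reduced to “minutes until rain”. For a `binary_sensor` with the window device class, `"on"` means open. The entity names, the `_window` naming convention, and the 30-minute lead time are hypothetical, and a real version would likely hand the facts to the LLM to phrase rather than use a fixed sentence.

```python
from typing import Optional


def rain_window_alert(states: dict[str, str],
                      minutes_until_rain: Optional[int],
                      lead_time: int = 30) -> Optional[str]:
    """Return an alert if rain is near and any window sensor reports open.

    `states` maps Home Assistant-style entity IDs to state strings.
    Assumes (hypothetically) that window sensors are named
    binary_sensor.<room>_window; "on" means open for window sensors.
    """
    if minutes_until_rain is None or minutes_until_rain > lead_time:
        return None  # no rain soon, nothing to say
    open_windows = [
        entity.split(".", 1)[1].replace("_", " ")  # "kitchen_window" -> "kitchen window"
        for entity, state in states.items()
        if entity.startswith("binary_sensor.")
        and entity.endswith("_window")
        and state == "on"
    ]
    if not open_windows:
        return None  # stay unobtrusive when there is nothing to act on
    verb = "is" if len(open_windows) == 1 else "are"
    return (f"Heads up: rain is expected in about {minutes_until_rain} minutes "
            f"and the {', '.join(open_windows)} {verb} open.")


states = {
    "binary_sensor.kitchen_window": "on",
    "binary_sensor.bedroom_window": "off",
    "light.kitchen": "on",
}
print(rain_window_alert(states, 20))
# Heads up: rain is expected in about 20 minutes and the kitchen window is open.
```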

Guiding Principles

- Privacy-first – Even if the system remains entirely local, security and privacy are key.

- Good enough code is good enough – Priority is usability, with quality code as a secondary step. (The nicest way to say it is that it will be messy.) A few things work in my favor for performance, like the speed of my local network.

- Use existing infrastructure – No special phone apps or reliance on GPS. I have home security cameras, but they will remain as such. The system can leverage them, but no additional cameras will be added to support this. They remain, first and foremost, security cameras—not creepy home spies.

- Visual processing – Utilize OpenCV and MotionEye for camera-based analysis

- Share progress – Blog, LinkedIn, YouTube, and potential open-source contributions

- Seamless experience – The AI should feel like it’s everywhere, always listening and responding naturally

- LLM-written code – I will fully leverage LLMs’ code-writing ability to generate the code.

What’s Coming in This Series

Each post will focus on a different aspect of development, including:

- Choosing the right hardware for an always-on AI and the mistakes I’ve made so far.

- Selecting and augmenting LLMs for natural conversations, and the interesting problems that poses, like streaming text responses back as spoken audio.

- Building an AI “workflow” that integrates speech, memory, and automation

- Testing real-world interactions and improving performance

- Exploring the challenges and limitations of today’s AI tools
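The streaming-audio problem largely comes down to not waiting for the full reply before speaking. One common approach, sketched here under the assumption that the LLM yields text in arbitrary chunks and the text-to-speech engine accepts one sentence at a time, is to buffer the chunks and release each sentence as soon as it is complete. `sentences_from_stream` is a hypothetical helper, not code from this project, and its sentence splitting is deliberately naive.

```python
import re
from typing import Iterable, Iterator

# A sentence boundary: whitespace preceded by ".", "!", or "?".
_SENTENCE_END = re.compile(r"(?<=[.!?])\s+")


def sentences_from_stream(chunks: Iterable[str]) -> Iterator[str]:
    """Group streamed LLM text chunks into whole sentences for TTS.

    Naive splitting: abbreviations such as "Dr." will trigger an early break.
    """
    buffer = ""
    for chunk in chunks:
        buffer += chunk
        parts = _SENTENCE_END.split(buffer)
        # Everything except the last part is a finished sentence.
        for sentence in parts[:-1]:
            if sentence.strip():
                yield sentence.strip()
        buffer = parts[-1]  # keep the unfinished tail for the next chunk
    if buffer.strip():
        yield buffer.strip()  # flush whatever remains when the stream ends


stream = ["It's 69 in the kit", "chen. The living", " room is 71. Any", "thing else?"]
spoken = list(sentences_from_stream(stream))
# ["It's 69 in the kitchen.", "The living room is 71.", "Anything else?"]
```

Each yielded sentence could be sent to the TTS engine immediately, so the first words play while the model is still generating the rest.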

This is a passion project, and like any real innovation, it won’t be a straight path. I’ll be sharing my wins, my failures, and the lessons learned as I push this idea forward.

I’d love to hear your thoughts as this project evolves. What would you want a home AI to do? Let’s explore the possibilities together.

Next up, I’ll dive into defining the vision and key challenges of building an AI assistant that actually feels intelligent, and some work I've already done that made me confident enough to start a blog...

Finally, it won’t be called J.A.R.V.I.S., nor will it sound like Paul Bettany. Don't sue me, Disney.

OK, maybe it will sound a little like Paul...
